I’m going to share with you a technique I’ve found for doing lazy, reliable, deterministic, constant-time infinite generation of tile based levels using Wave Function Collapse (WFC). But first, let’s cover some background, Modifying in Blocks, and lazy chunk based infinite generation.

Modifying in Blocks

Last time, we looked at a procedural generation technique called Model Synthesis, and in particular, focussed on an a technique it used to help scale out larger outputs, called “Modifying in Blocks”.

The idea is that that naive Model Synthesis or Wave Function Collapse gives us a generator with the following properties:

- It can reliably generate small outputs.

- It becomes increasingly unreliably when generating larger outputs

- Each run of the generator can be given a list of constraints, i.e. requirements about which tiles may appear where in the output.

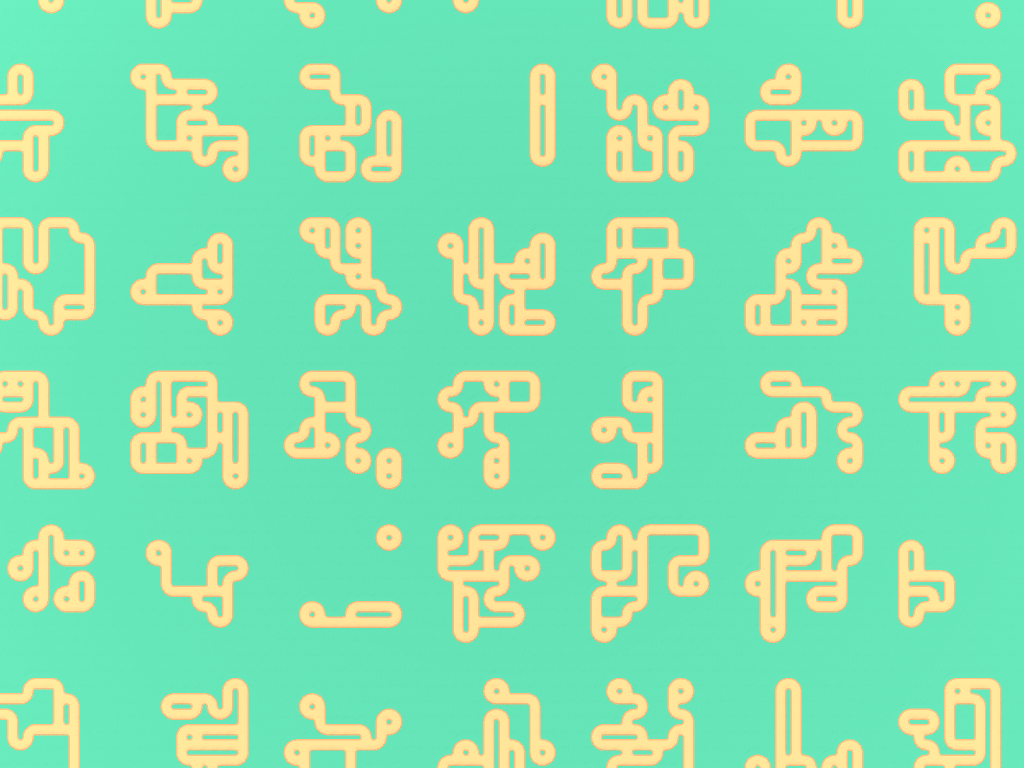

Modifying in Blocks helps us generate large outputs, by subdividing the output into small blocks. We generate the blocks one at a time, using the reliable generator, and constrain each block so that it joins up with the blocks that have been generated so far. Here’s what it looks like, for a simple tileset of orange path tiles that must connect to each other.

Note how the blocks actually overlap each other, so that each block replaces some of the tiles previously generated.

Lazy Chunks

As I was learning about this technique, I was also mulling over an unrelated problem, doing WFC generation with an infinite size output. Some algorithms, like Perlin Noise, are extremely easy to make work on an infinite plane. Perlin noise is deterministic, and you can evaluate any particular location independently of anything else. So you can make an “infinite” plane of perlin noise by just evaluating the finite area that the current user is looking at, and lazily evaluating more as the user scrolls / pans over the plane.

It’s much harder to do so with a constraint based generator like WFC. WFC works by evaluating cells one at a time, and it propagates constraints indefinitely far. So cells are not at all independent of one another, and you cannot work out what will be selected for a given cell without evaluating all the preceeding cells.

The typical way to resolve this is to run WFC in chunks. Each chunk is a separate evaluation of WFC, and we apply constraints from already evaluated chunks to ensure the whole thing joins together. When we can lazily evaluate chunks as the user moves.

This concept is best illustrated in Marian42’s excellent city generator, where you can clearly see the chunks being loaded in the distance.

Putting it together – Infinite Modifying in Blocks

Both of these techniques, Modifying in Blocks and Lazy Chunks, involve doing small generations at a time, and linking them together with constraints. Are these the same thing? Not quite.

Modifying in Blocks chooses the blocks in a fixed order, specifically a linear scan. Lazy chunks, on the other hand, chooses blocks that are near the player position to evaluate. But because each block depends on nearby already generated blocks, different evaluation orders give different results. Marian’s city generator is not deterministic – even using the same random seed, you’ll see different results.

So we need a well defined evaluation order to have deterministic results. But we also need a dynamic evaluation order, so that we only need evaluate things that are actually near the player, regardless of where they are or how they move.

It took me quite some time to find a solution that reconciles these two needs.

What I realized is that when we evaluate a block in Modifying By Blocks, it doesn’t depend on all the proceededing blocks. It depends only on blocks that contribute constraints, which means blocks that border/overlap it. In order to evaluate a given block, you don’t need evaluate every block that comes before it in the fixed evaluation order, you just first need evaluate its dependencies. To evaluate those, you need evaluate the dependency dependencies and so on, forming a tree (or DAG) of blocks that need evaluation.

So the trick is to design a fixed pattern of blocks where the dependency tree is always finite. That way, to evaluate any individual block, there is a fixed amount of work to be done. We can evaluate only the blocks that are near the player, while keeping determinism.

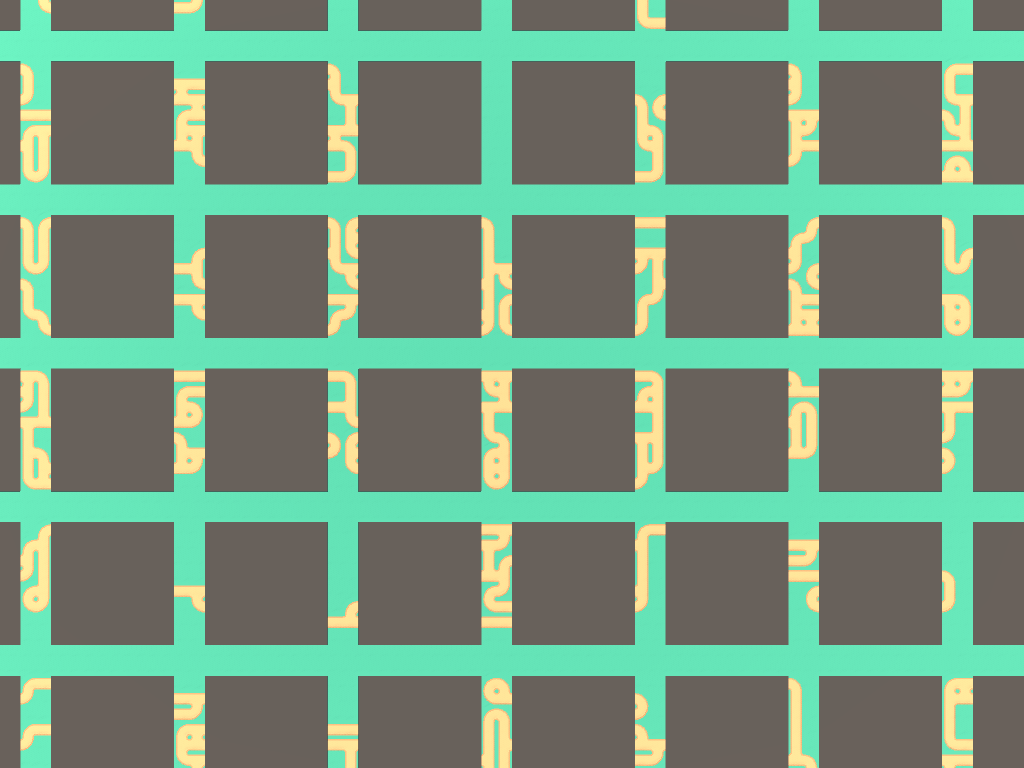

Here’s the particular evaluation scheme I chose for 2d, though there are several reasonable ones.

Layered Block Evaluation

First, I initialize the entire output to a known repeating pattern (this step is optional).

Then, define layer 1 as a set of blocks arranged in an infinite square grid, with a small gap between them. Because none of these blocks are near each other, they are all completely independent and can be evaluated in any order.

After the layer 1 blocks are defined, layer 2 uses the same grid, but offset 50% of the block width along the x-axis. Thus, each block in layer 2 overlaps exactly two blocks from layer 1, and can be evaluated after both of those blocks are done. Layer 2 blocks erase some of the tiles from the layer 1 blocks, and copies other tiles directly by using constraints.

Similarly, layer 3 builds on layer 2, just offset 50% along the y-axis.

Finally, layer 4 builds off of layer 3, offset 50% along the x-axis again. The result of the layer 4 blocks is the final output of the algorithm.

Because blocks in each layer only depends on 2 blocks from an earlier layer, they have a fixed dependency tree with depth equal to the layer number. To evaluate a layer 4 block, you first figure out the dependency tree, and compute all the blocks in it. Any other blocks can be ignored without hurting determinism.

I have chose to use 4 layers like this because, even though every layer uses a square grid with a gap between blocks, by layer 4, every tile has been replaced at least once, meaning it is very hard to to detect any trace of the pattern of blocks in the final output.

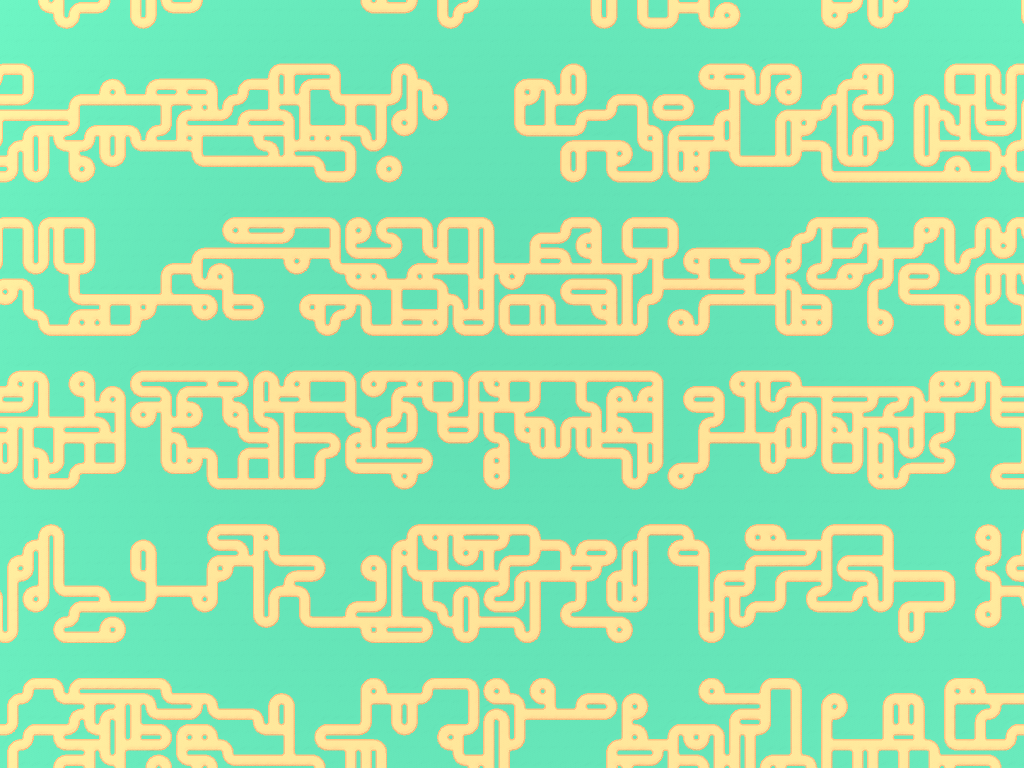

I think this slideshow illusratates the technique better than I can in words.

This layering system enables lazy generation, because layer 4 blocks can be evaluated independently from each other (though caching earlier layers can speed up the algorithm significantly).

The generation is deterministic, as the evaluation of blocks doesn’t depend on user input.

It’s constant time, as there’s a fixed amount of work to be done for each block of the output: Each layer 4 block needs a total of 12 blocks from earlier layers evaluated before it can be computed.

It’s also a robust technique – if a given block fails to evaluate, you can carry forward tiles from the earlier layers.

Shortcomings

While I think this is a subsantial improvements on the usual way of generating chunks lazily, it does have some problems.

Performance

Because of the layers, you are essentially doing 4x as much work as a simpler approach. Without caching, the multiple can be even higher.

Background Layer

Before starting on layer 1, I filled the entire area with a known good background, which serves to constrain the layer 1 generation. This is optional, as you can also run layer 1 without any constraints at all. But if you do so, you lose some of the robustness guarantees, as it’s no longer always possible to deal with generation errors by falling back to an earlier layer. Also, without those layer 1 constraints, it’s much easier for layer 1 to generate in such a way that causes issues for layer 2.

As usual, robustness for Wave Function Collapse boils down to the tileset – some are trivially easy, and some are NP-complete, and any technical solution can only help so much.

Chunkiness

While the repeated evaluations are quite good at hiding where the borders of chunks are, there are some things that this technique cannot cope with. Any patterns or special constraints that are naturally larger than the size of a block are simply impossible. This is unfortunate, but it’s only natural that arbitrarily complex constraints cannot be extended from finite regimes to infinite ones – there’s a whole body of academic literature about that.

At least for practical cases, increasing the size of the blocks is usually sufficient for getting rid of the most obvious block-based artifacts.