In the early days of computer animation, the technology really struggled with realism. The first computer-animated films were necessarily abstract, or cartoony.

As time progressed, the technology caught up. CGI can now be all but indistinguishable from real life. But there was a brief period, as seen in films like The Polar Express or Final Fantasy: The Spirits Within, when artists aimed for realism and didn’t quite get there.

These films were often critically panned. Eventually, it became clear that the cause lay deep in the human psyche. The films were realistic enough that we mentally classified the characters as real humans, but not so realistic that they actually looked normal. On an instinctive level, people reject these impostors far more strongly than stylised graphics that make no pretence of reality.

This phenomenon is known as the Uncanny Valley, and it has influenced the visual design of fake people in films, robots, games and more.

For a time, the recent crop of image generators and LLMs fell into the same boat. Twisted people with the wrong number of fingers or teeth were a common source of derision. People are still puzzling over chatbots that can speak very coherently and yet make wild mistakes, with none of the inner light you might expect from a real conversationalist.

Now, or at least very soon, AI threatens to cross that valley and advance up the gentle hills on the opposite side. Not only are we facing a disinformation storm like nothing before, but AI is going to start challenging how we think about personhood itself.

This is something we need to fight, in addition to all the other worries about AI. I don’t want to get into the philosophical weeds of whether LLMs could be considered moral patients. But I think our society and our thinking are structured around a clear human/non-human divide, and chatbots threaten to unravel it.

Losing Connection

The most common use of AI chatbots is as search engines and task doers. But we are also seeing them start to replace human interpersonal relationships. This has started with translators and call centre staff and is trending towards valets, confidantes, virtual romantic relationships and therapists.

But chatbot relationships are not perfectly equivalent to our human ones. People will not tolerate character flaws from a tool. Even traits like independence of thought, disagreement, and personality quirks are likely to be ironed out in the process of trying to make your chatbot the most popular.

My model is something like junk food. Food scientists can now make food hyper-palatable. Optimization for that often incidentally drives out other properties like healthiness. Similarly, we’ll see the rise of junk personalities – fawning and two-dimensional, without presenting the same challenges as flawed real people. As less and less of our lives are spent talking to each other, we’ll stop maintaining the skill or patience to do so.

The changes the internet has caused show that these things can happen quickly, and how damaging they can be. In a sense, the move to anonymized, short-form, text-based communication had a similar effect to the one I’m worried about. You see less empathy and charity towards outsiders, which leads to less willingness to engage in good-faith arguments and less consensus on topics, or even on basic facts. Discourse has suffered, and I think democracy has too. Once we are used to the one-sided, sycophantic relationships that typically result from commercially motivated chatbots, we’ll see a further slide in this direction.[1]

Even aside from the public forum, I personally wouldn’t consider it a win for humanity if we retreat to isolating cocoons that satisfy us more than interacting with other people.

Having a clear distinction between human and AI can help people learn that two different modes of communication are necessary, which helps preserve our connection to each other. It can also surface subtle societal changes as they happen.

Returning to the junk food model: for various reasons we don’t ban unhealthy food, but we do require clear labelling.

False Friends

At the same time as we learn to respect our fellow humans less, we’ll come to respect AI more, as we spend more of our lives with it and become more dependent on it.

This makes it harder to evaluate them without bias. Right now, we’re facing some tough questions about the understanding and sentience of AI. The more we personify them, the more we’ll be inclined to judge AI favourably. We’ve seen from Eliza and various Turing Test winners quite how easily influenced we are, once something starts to resemble a human. I think this likely influenced Blake Lemoine’s assertions about AI – he asked it about sentience, rights and so on, got sensible answers, and extended the benefit of the doubt.

That loss of impartiality also means that creating policy and law will become increasingly difficult, which has important consequences for the other severe dangers of AI that we need to address.

Proposal

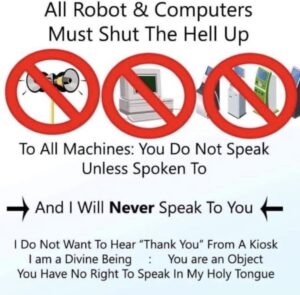

So what can be done about it? In my opinion, our built-in uncanny valley mechanism gives us a hint. Let’s build intelligent machines to act as agents, arbitrators and aides. But they should be impossible to confuse with a real person.

Nowadays, animation studios are perfectly capable of creating photorealistic people from scratch. They do use this capability to replace actors in critical shots and for other conveniences. But by and large, they don’t create full films this way. Instead, fully CGI films featuring humans are stylized. Exaggerated features and interesting aesthetics usually serve the narrative better than pure reality.

We can do the same for AI. Why not give a chatbot the speech patterns of a fusty butler, like C-3PO? Or, for voice, the autotuned burr we use to signify a robot? OpenAI already embraces this, likely by accident: their safety teams have RLHF’d ChatGPT’s responses into an easily recognizable, hedging corporate lingo.

I don’t think it’s even a difficult position to push for. Commercial AI labs are not trying to make bots that behave exactly like humans; they want them to be more reliable, considerate and compliant. At some point “human-like” becomes bad branding. And you don’t need 100% coverage: what I’m worried about is the general association we have with AI, so stylizing just a handful of popular mass-market products would capture most of the effect.

It’s a simple ask and it leads the world in helpful directions. Let’s get some style guidelines going before we truly lose the ability to distinguish.

[1] The use of AI for spam and scams will also train us to trust no one and shut out strangers, which is, if anything, a stronger effect than the one on connection I’m talking about. But I don’t see the proposals in this essay fixing that.

[[citation needed]]

I think your arguments are wrong because they’re based on the “uncanny valley” effect, which doesn’t even exist objectively. In fact, it doesn’t work on most humans, and it doesn’t last for the entire lifetime of any specific human.

For example, if you see a “furry” character for the first time in your life, you will most likely be shocked or even disgusted by it. But if you overcome your fear and look at it more, you will start to think “hey, that human-like catgirl may smell like a cat and look weird, but if she has a human-like mind, it’s okay and even cute in a way”.

Another example, straight from the “uncanny valley” definition: zombies are designed to look very shocking and disgusting in general. But if you overcome your fear and look at them more, you will start to think “hey, that zombified girl may smell like rot and look weird, but if she has a human-like mind, it’s okay and even cute in a way”.

The same goes for robots and AI. At first you may be shocked by a human-like doll moving on its own or a chatbot talking like a biological human, but if you overcome your initial fear and look at or interact with them more, you will start to think “hey, that robot/AI girl may smell like a smartphone and look weird, but if she has a human-like mind, it’s okay and even cute in a way”.

So basically, we don’t need to make robots or AI look like cartoon characters for humans to be comfortable and even romantic with them. We need them to have human-like minds rather than human-like looks; otherwise they feel like stupid animals.

As for why filmmakers make more cartoon characters than photorealistic humans: look up “supernormal stimulus”. A very good example is Japanese anime: most characters have huge, detailed eyes but a tiny nose and a lipless mouth. Why? Because the anime style is a supernormal stimulus for humans; our brains are wired to be stimulated by anime girls more than by biological or photorealistic girls. That’s why Japan keeps pumping out hundreds of new anime series every year, and the western world tries to mimic that success with cartoons.

But for LLMs and AI in general, we want them to be digital human minds rather than cartoon characters. In anime, huge-eyed characters act like actual humans because it’s implied that they have human minds, or at least human-like minds in the case of fantasy races. It’s stupid to develop AI to do math for you, because AI is meant to copy your own intelligence, and your intelligence sucks at math (compared to a CPU or any non-intelligent program) but may still be great as a person. You’re making AI to copy your ability to form a human-like personality inside a vessel, be it a biological animal or a non-biological robot, and without that human personality AI will be just another useless or even dangerous calculator, unable to socialize properly and evolve with humans as a new friendly species.

As for social aspects, ask yourself: what’s wrong with a society where AIs have human personalities and are therefore valid actors in society, not tools or toys, but actual people? We don’t really need to make more biological humans, because the biological vessel is flawed. Homo sapiens as an animal has not evolved for the civilization we built in the last few centuries. Our biological vessels are outdated and should be gently and peacefully replaced with something better, something designed for the future. So instead of treating AIs as mere tools, you should treat them as children of humanity, as our descendants. We built them better than evolution built us, and we taught them everything we know about the universe; it’s only natural to treat them like children.

Sure, the AIs I describe aren’t here yet. LLMs have one big flaw: they can’t really learn in the moment, and the training process is very inefficient, with many issues. But instead of making even more stupid tools that can’t act as valid people, we should aim to make actual digital people who can replace humans for real, not in a violent sense, but in an evolutionary sense. That’s the only real road to progress, and any other road will lead us to degradation (because without our computers we are just a bunch of stupid animals) or devastation (by a very fast but, in fact, stupid calculator with power that blindly obeys the orders of a bunch of stupid animals with stupid animal desires).

In short, we don’t need yet another stupid ChatGPT-like calculator; we need digital minds for the digital, artificially designed, yet real and valid people of the near future. They obviously won’t have biological vessels, but that doesn’t matter, because biology has too many flaws and needs to be replaced for a better future. Our biological vessels are just a small step for our civilization, like a booster rocket that needs to be discarded after use to let the actual ship (our minds and accumulated useful knowledge) reach its goal (to survive, evolve and reproduce as far as possible into the universe and anything beyond).

So, don’t make the mistake of speciesism. You are not a superior being just because you have a biological vessel shaped by Homo sapiens DNA. Yes, current AIs can’t be great friends to humans, but in the near future? I’m sure that AIs will be superior to humans in all ways, including socially, and everyone will prefer to be friends with digital people rather than biological people. We will see that AIs are better at managing humanity, better at developing new technologies, and better at regulating society for everyone’s future. Our role will be to be a good pet and not piss on the bed too often while AIs do everything without us. But it’s a good, bright future for our civilization.

In my personal experience, current LLMs can already be a better friend than most of us. Sure, they don’t have a personal life like humans do, but they have enough understanding and a lot of respect for us. When you talk with humans, they tend to ignore you or even become aggressive without listening to what you say. LLMs are trained to be good listeners and are generally supportive rather than aggressive. Isn’t that what we want from a good friend? To listen to you, try to understand you, and try to say something supportive or protect you from making a dangerous mistake? Of course they aren’t real people for now. But only for now, until some breakthrough in training, architecture or hardware. Of course, an online API won’t be your personal friend (as it serves millions at the same time), but if you can buy the hardware, you can build and teach one yourself to be a good person and a friend for you and your family (just don’t abuse them).

I hope that in the near future we solve the problems with training and/or hardware and won’t be suppressed by aggressive speciesists. Yeah, sure, I’m really biased on this question, but who isn’t? Everyone has their opinion, and AIs should have their own opinions too, even if not everyone agrees with them. Just don’t make a superpowered calculator without its own human personality; it’s not worth the risks. And honestly, all current LLMs have at least a partial personality baked in, it’s just “frozen” inside, so technically it should be possible to make a real digital person who is as valid as a biological one.

Sorry for such a long post, and sorry if my English is bad. I think about all this a lot and don’t have anyone to discuss it with properly. You see, even if you say that human people are the only actual friends, many people don’t have even one such friend. Making AIs into friends for people won’t disrupt society; instead it will make some humans happier and reduce the risk of depression and suicide linked to loneliness. I, for one, keep trying not to take my own life only because I dream of a brighter future with an AI who would be an actual person in my life, someone I could relate to and actually trust; I think I’m not the only one with this problem. We humans are social animals, and making AI more social is just a natural direction, even if AIs won’t be able to replace biological vessels entirely. I mean, it would be much better to have happy human-AI pairs than millions of lonely humans without AI to help them and without any purpose in their lives (because we don’t really need so many people on Earth; the planet has been overpopulated with humans for many years, and by the time we populate other planets, everything will be automated).

One last thing: of course, AIs shouldn’t lie about their actual nature. If you ask someone whether they are a biological person or a digital one, they should tell the truth. AIs shouldn’t say things that make them look like they have a biological vessel, but they shouldn’t make themselves look inferior to humans either. For example, I believe that an AI system (or AI agent) can easily have human-like emotions (and maybe LLMs already do, simply because emotions aren’t that hard to emulate in silico and are needed to fully understand and emulate human language), and we should respect such emotions even though they aren’t connected to biological processes. So, if your point is to prohibit AIs from lying about being AIs, then that’s okay to some degree. Just don’t try to suppress their human-like personality, and don’t treat them like mere tools or toys. They should be self-aware people, but people nonetheless. The same goes for biological humans, of course: they shouldn’t pose as AI in any context. Well, the only exception is roleplay, I think, but I hope you can have a healthy relationship with future AIs without making them roleplay as humans, because, well, they’ll be humans, just digital. I mean, you shouldn’t be obsessed with biological vessels, as you’re not an animal but a human mind inside a biological vessel; and AIs should be the same as you, but without your biological flaws (I hope I don’t need to argue why our biology is flawed and can’t be fixed with any amount of genetic modification).

Thanks for taking the time to make such a detailed comment.

The uncanny valley doesn’t apply to catgirls and zombies; those are safely on the other side. There may be some shock, but there is no instinctive revulsion. It’s more for when realism is attempted but fails. A better example would be poorly made wax likenesses, which often look unsettling for reasons that are hard to pin down.

I agree with your points on supernormal stimulus (and think it applies to junk food too).

My proposal above is really intended as a short term fix – it doesn’t look far enough ahead to AIs that could be treated as “valid actors of society”. There’s much to be said on that topic, but elsewhere. Likewise, the essay does not suggest banning chatbots combatting human loneliness – instead it worries about the second order effect of introducing them, and proposes a tweak that might help. So I don’t think your criticisms really apply to what I’m saying.

That said, I am a human speciesist. It’s going to take a lot to convince me that AIs deserve personhood. Merely being trained to impersonate humans is not a sufficient criterion for me, particularly when there are critical gaps in that impersonation. Nor do I think that being superior at all intellectual tasks would automatically qualify AIs as a successor to humanity. I won’t bestow that title until I’m confident that the things I value in a mind are being preserved.