Back in the early days of computer animation, the technology really struggled with realism. The first computer-animated films were necessarily abstract, or cartoony.

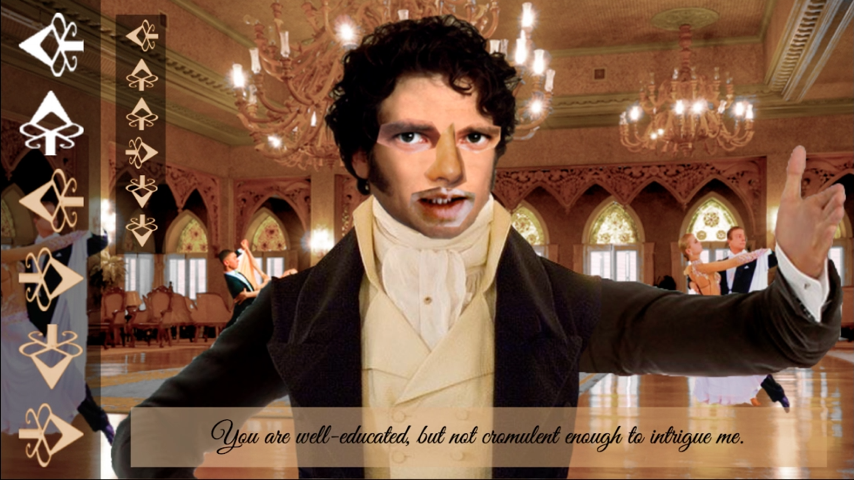

As time progressed, the technology caught up. CGI can now be all but indistinguishable from real life. But there was a brief period, as seen in films like The Polar Express or Final Fantasy: The Spirits Within, when the artists aimed for realism and didn’t quite get there.

These films were often critically panned. Eventually, it became clear that the cause was quite deep in the human psyche. These films were realistic enough that we’d mentally classify the characters as real humans, but not so realistic that they actually looked normal. On an instinctive level, people reject these imposters far harder than more stylised graphics that don’t have the pretence of reality.

This phenomenon is known as the Uncanny Valley, and it has influenced the visual design of artificial people in films, robots and games.

For a time, the recent crop of image generators and LLMs was in the same boat. Twisted figures with the wrong number of fingers or teeth were a common source of derision. People are still puzzling over chatbots that can speak very coherently and yet make wild mistakes, with none of the inner light you might expect from a real conversationalist.

Now, or at least very soon, AI threatens to cross that valley and advance up the gentle hills on the opposite side. Not only are we faced with a disinformation storm like nothing we have seen before, but AI is going to start challenging how we think about personhood itself.

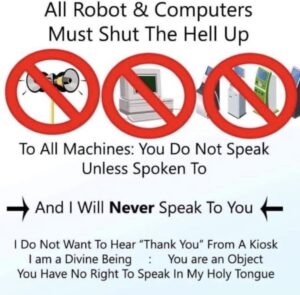

This is something we need to fight, in addition to all the other worries about AI. I don’t want to get into the philosophical weeds of whether LLMs could be considered moral patients. But I think our society and our thinking are structured around a clear human/non-human divide. Chatbots threaten to unravel that.
